Olist E-Commerce ELT Pipeline

1. Dataset Background

Olist is a Brazilian e-commerce marketplace that connects small businesses to major marketplaces, enabling them to sell their products online across Brazil.

This dataset includes 100,000+ orders placed between 2016 and 2018 with details including:

- Seller performance

- Customer profiles

- Product details

- Order timelines (purchase, payment, shipping)

- Reviews and ratings

- Geolocation (states and cities)

- Marketing channel performance

In this project, the focus is on seller dynamics to derive actionable insights.

2. Project Overview

This project builds a complete ELT pipeline:

- Extract: Raw CSV files are stored in Google Cloud Storage.

- Load: Airflow DAGs load them into BigQuery staging tables.

- Transform: dbt turns staging data into analytics-ready marts.

- Visualize: Metabase connects to BigQuery for dashboarding.

The infrastructure is managed with Docker and Terraform.

3. Getting Started

3.1 Clone Repository

git clone https://github.com/fachry-isl/olist-ecom-elt-pipeline.git

3.2 Cloud Service Provisioning

Google Application Credential

Follow this guide to create your credentials.

Save your credentials file to: ~/.google/credentials/google_credentials.json

Then set in variables.tf:

variable "credentials"{

description = "The path to the service account key file"

default = "~/.google/credentials/google_credentials.json"

}

variable "project" {

description = "Project"

default = "gcp-refresh-2025"

}

Terraform Apply

cd terraform

terraform apply

3.3 Setup Environment Variables

Edit docker-compose.yaml or create .env file:

GOOGLE_APPLICATION_CREDENTIALS=/.google/credentials/google_credentials.json

GCP_PROJECT_ID=gcp-refresh-2025

GCP_GCS_BUCKET=gcp-refresh-2025-olist-ecom

BIGQUERY_DATASET=olist_ecom_all

3.4 Build & Run Docker Container

docker-compose up --build

4. Tools Setup and Preview

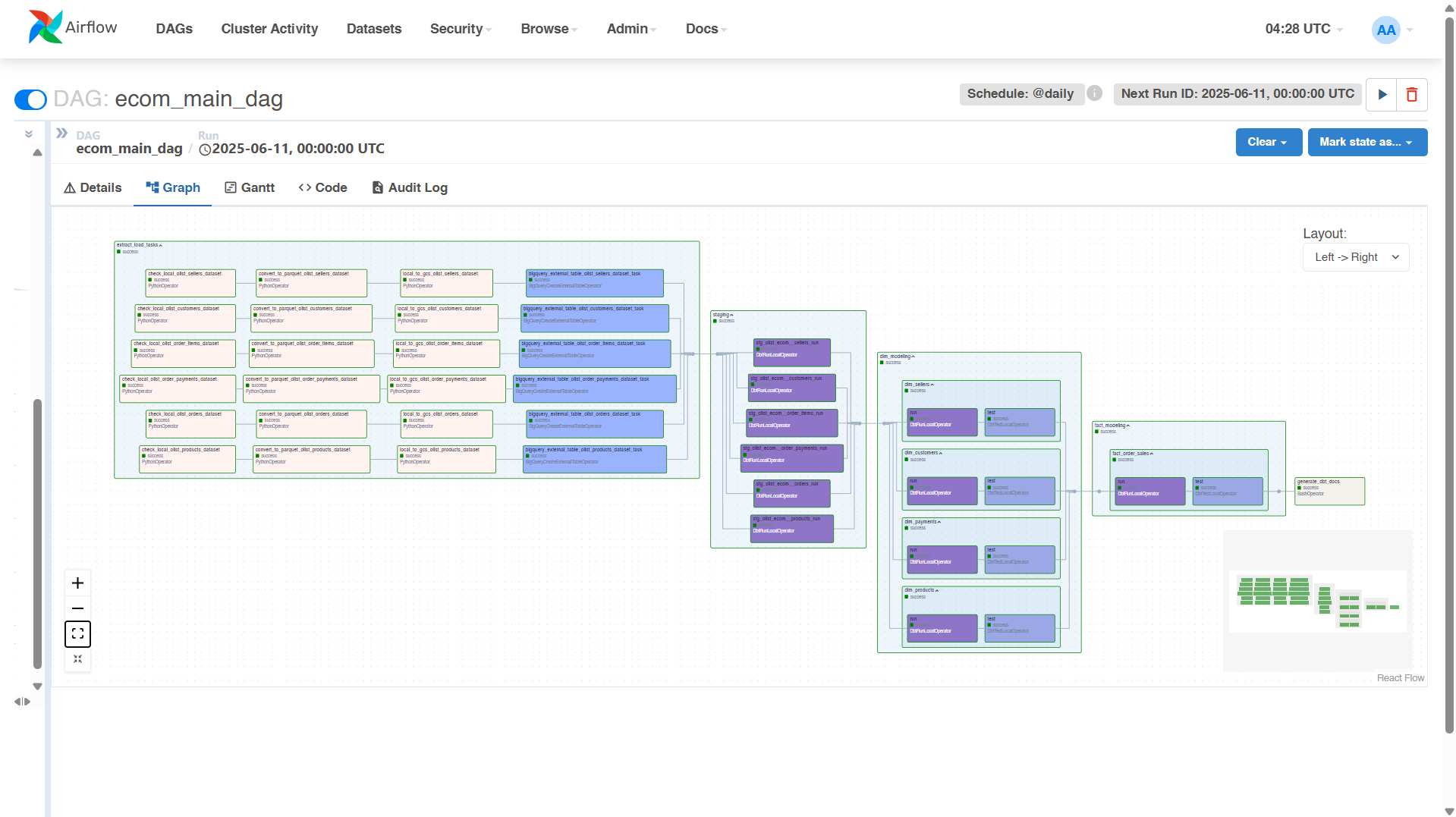

4.1 Airflow for ELT Pipeline

Airflow orchestrates:

- Extract from CSV to GCS

- Load from GCS to BigQuery staging

- Transform with DBT

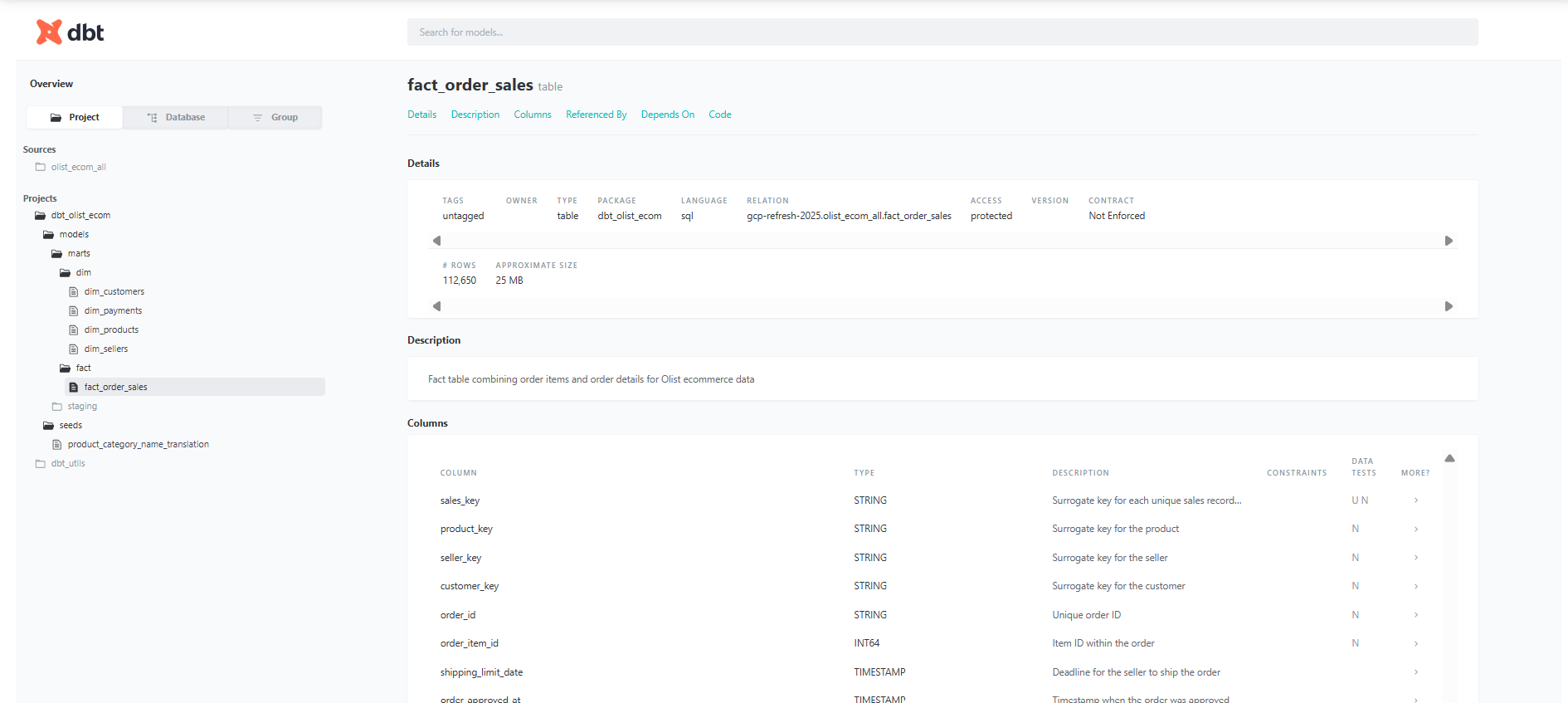

4.2 DBT with Cosmos in Airflow

DBT is used for transformations using star schema modeling.

- Fact and Dimension modeling

- Data quality checks (e.g. not null, unique)

- Documentation autogenerated

Cosmos integrates DBT inside Airflow DAGs.

You can access DBT docs from Airflow UI /dbt_docs.

4.3 Metabase for Visualization

Metabase connects to the final marts in BigQuery to build dashboards.

5. Key Insights

📊 Sales Performance Q3 2018

- Total Sales: $1.9M (↓ 37.95% QoQ, ↓ 23.39% YoY)

- Target Achievement: ~78% of $2.5M target

- Sales Trend: Peaked at $3.1M in Q2 2018, dipped in Q3

🛍️ Top Sellers & Categories

- Leading Categories: watches_gifts, cool_stuff, audio

- Consistent Sellers: Strong contributors to Q2/Q3 sales

📦 Product-Level Insights

- Most Ordered: Health & Beauty – 15% of all orders (50k+)

- Revenue Drops:

- watches_gifts: $311k → $192k

- bed_bath_table, sports_leisure also down

6. Summary

- End-to-end ELT pipeline deployed using Terraform, Docker, Airflow, DBT, and Metabase.

- Olist E-commerce dataset used to analyze seller and product dynamics.

- Key focus: identifying sales trends, seller performance, and product insights.

7. Recommendations

- Analyze seasonality to anticipate sales dips.

- Revisit underperforming categories post-Q2.

- Use seller/product insights to optimize listings.

Project by Fachry Ikhsal · 2025